Wave 0 Construction

This week we installed the first wave of testbed equipment. This includes:

- 21 Experiment plane access switches

- 16 Control plane access switches

- 9 Fabric switches

- 2 Spine switches

- 1 Gateway

- 4 File servers

- 5 Network emulation nodes

- 16 Console servers

It starts with a bunch of boxes.

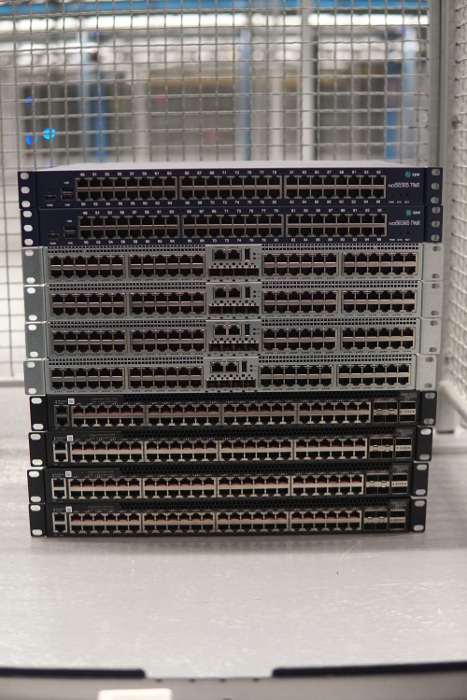

Eventually, the boxes turn into switches.

This is a network testbed, so we value connectivity. Here is just one rack's worth of switches, for one of our type-A racks. Each type-A rack is home to 192 nodes, and each node sits on three separate networks:

- experiment network

- control network

- console network

The nodes themselves have not yet arrived, but the switches are racked and waiting. The DComp testbed has 11 racks in total.
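To give a sense of why one rack needs so many switches, here is a back-of-the-envelope count of node-facing links in a single type-A rack. It assumes one link per node per network, which the numbers above imply but do not state outright, and it deliberately does not multiply by 11, since not every rack is necessarily type-A.

```python
# Rough count of node-facing links in one type-A rack.
# Assumption: each node has exactly one link to each of the three networks.
NODES_PER_TYPE_A_RACK = 192
NETWORKS = ("experiment", "control", "console")


def node_links_per_rack(nodes: int, networks: int) -> int:
    """Total node-facing links: one per node per network."""
    return nodes * networks


links_by_network = {net: NODES_PER_TYPE_A_RACK for net in NETWORKS}
total_links = node_links_per_rack(NODES_PER_TYPE_A_RACK, len(NETWORKS))

print(links_by_network)  # {'experiment': 192, 'control': 192, 'console': 192}
print(total_links)       # 576
```

That is 576 cables terminating on access switches (or console servers) for a single rack, before counting any uplinks.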

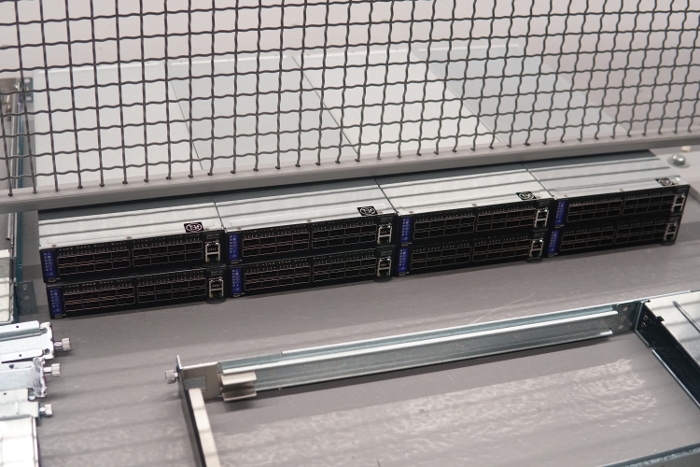

The switches above are 1G access switches. Their traffic is ultimately aggregated into a 100G fabric/spine system.

The servers shown above the switches are the network emulation servers. Each is an AMD EPYC-based system with two 120G Netronome cards used for emulation.

This round, we also installed and powered up the storage servers, which run Ceph. Each node has 8x 10TB drives for mass storage and 4x 2TB NVMe drives for fast image serving.
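As a rough illustration of what those drives add up to, the sketch below computes raw and approximate usable capacity. Two assumptions are not from the post: that the "4 File servers" in the Wave 0 list are these Ceph nodes, and that pools use 3-way replication (Ceph's default replicated pool size). The real pool layout may differ, for example if erasure coding is used.

```python
# Back-of-the-envelope Ceph capacity for the storage servers.
# Assumptions (hypothetical, not from the post): 4 storage nodes,
# and replicated pools with 3 copies of every object.
SERVERS = 4
HDD_PER_SERVER, HDD_TB = 8, 10
NVME_PER_SERVER, NVME_TB = 4, 2
REPLICAS = 3  # hypothetical pool setting


def raw_tb(servers: int, drives: int, drive_tb: int) -> int:
    """Raw capacity across all servers, in TB."""
    return servers * drives * drive_tb


def usable_tb(raw: float, replicas: int) -> float:
    """Usable capacity under N-way replication.

    Ignores real-world overheads such as full-ratio headroom and metadata.
    """
    return raw / replicas


hdd_raw = raw_tb(SERVERS, HDD_PER_SERVER, HDD_TB)     # 80 TB per node
nvme_raw = raw_tb(SERVERS, NVME_PER_SERVER, NVME_TB)  # 8 TB per node
print(f"HDD:  {hdd_raw} TB raw, ~{usable_tb(hdd_raw, REPLICAS):.1f} TB usable")
print(f"NVMe: {nvme_raw} TB raw, ~{usable_tb(nvme_raw, REPLICAS):.1f} TB usable")
```

Under these assumptions this prints 320 TB raw (about 106.7 TB usable) for the spinning disks and 32 TB raw (about 10.7 TB usable) for the NVMe tier.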

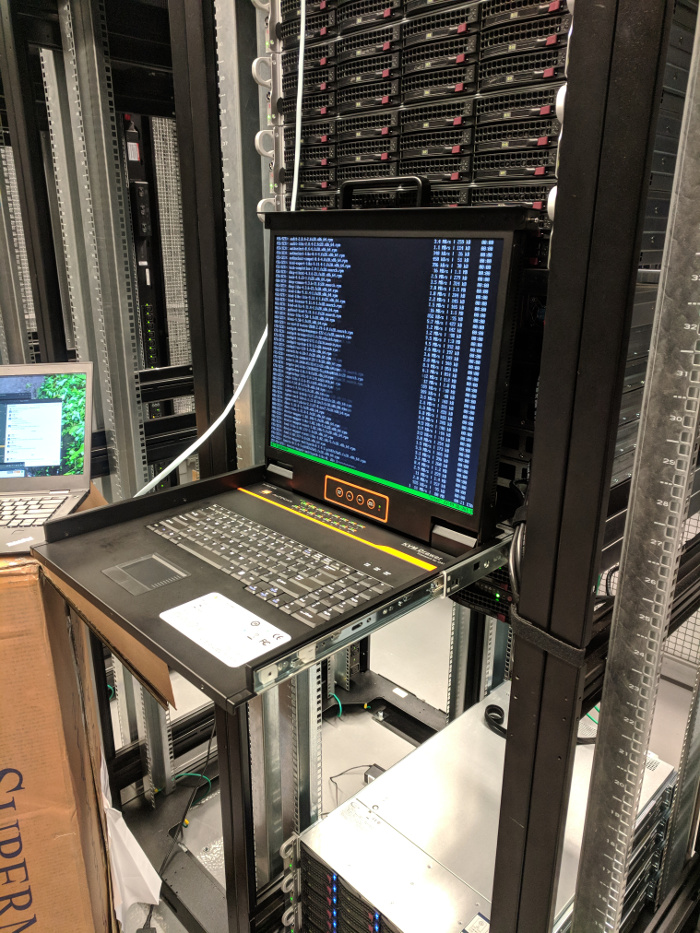

The testbed is plumbed with console servers and KVM access for remote and on-site management.